Amazon Web Services (AWS), a US subsidiary of the Amazon group, provides on-demand cloud computing services on a platform of the same name. The company has announced new additions to its portfolio of machine learning services, aimed at making generative AI more accessible to its customers.

Amazon Web Services: The Goal of More Affordable Generative AI

The conditions for machine learning (ML) to become an essential technology have been taking shape for decades. But recent developments, such as more scalable computing capacity, the proliferation of data, and rapid advances in ML technologies, have made it possible to truly accelerate the adoption of ML and AI across all economic sectors.

More recently, generative AI applications such as ChatGPT have captured widespread attention, showing that we are indeed at an exciting tipping point in the large-scale adoption of ML.

Amazon Web Services: Making it easier to reinvent operations with generative AI

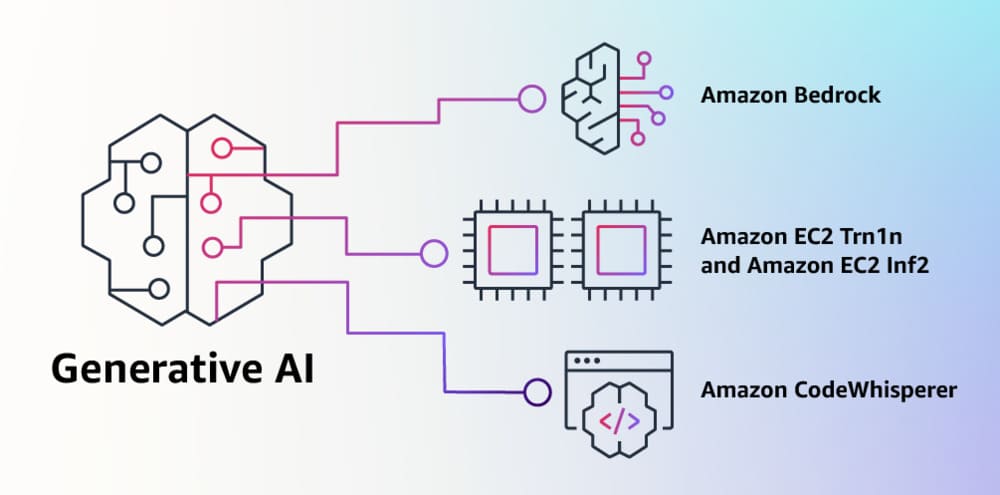

To simplify this process and help businesses reinvent most of their operations with generative AI, AWS has announced a series of initiatives.

Amazon Bedrock: Easily build and scale generative AI applications

Amazon Bedrock is a new service for building and scaling generative AI applications, that is, applications that can generate text, images, audio, and synthetic data in response to a prompt. Bedrock gives customers easy access to foundation models (FMs), the large ML models on which generative AI is built, from leading AI startups, as well as exclusive access to the Titan family of foundation models developed by AWS. With Amazon Bedrock, AWS customers have the flexibility to choose the models best suited to their specific needs.

Reducing the cost of running generative AI workloads

According to AWS, its Inferentia chips offer the highest energy efficiency and the lowest cost for running demanding generative AI inference workloads (such as running models and answering queries in production) at scale. This reduction in cost and energy consumption makes generative AI accessible to a broader range of customers.

Custom silicon to train models faster

The new Trn1n instances (the compute resources where processing takes place, in this case powered by AWS's custom Trainium chips) offer very high network bandwidth, which is essential for training these models quickly and efficiently. Developers will therefore be able to train models faster and at lower cost, which should ultimately lead to more services built on generative AI models.

Real-time assistance for coding

Amazon CodeWhisperer uses generative AI to suggest code in real time, based on the developer's comments and existing code. Individual developers can use Amazon CodeWhisperer for free, with no usage limits, while paid options are also available for professional use.
