Cerebras Systems sets the record for the largest AI models ever trained on a single device, with the new Cerebras WSE-2

Cerebras Systems, a pioneer in high-performance artificial intelligence (AI) computing, today announced, for the first time ever, the ability to train models with up to 20 billion parameters on a single CS-2 system, a feat not possible on any other single device. By allowing a single CS-2 to train these models, Cerebras reduces the system engineering time required to run large natural language processing (NLP) models from months to minutes. It also eliminates one of the most painful aspects of NLP: partitioning the model across hundreds or thousands of small graphics processing units (GPUs).

Statements

The following are the first statements regarding the new Cerebras WSE-2.

"In NLP, larger models prove to be more accurate. But traditionally, only a very select few companies had the resources and expertise to do the painstaking work of breaking up these large models and spreading them across hundreds or thousands of graphics processing units,"

said Andrew Feldman, CEO and co-founder of Cerebras Systems. He added:

"As a result, only very few companies could train large NLP models. It was too expensive, too time-consuming and inaccessible to the rest of the industry. Today we are proud to democratize access to GPT-3 1.3B, GPT-J 6B, GPT-3 13B and GPT-NeoX 20B, enabling the entire AI ecosystem to set up large models in minutes and train them on a single CS-2."

Kim Branson, SVP of Artificial Intelligence and Machine Learning at GSK, said:

"GSK generates extremely large datasets through its genomic and genetic research, and these datasets require new equipment to conduct machine learning."

He then added:

"The Cerebras CS-2 is a critical component that allows GSK to train language models using biological datasets at a scale and size previously unattainable. These foundational models form the basis of many of our AI systems and play a vital role in the discovery of transformational medicines."

How the new Cerebras WSE-2 works

These world-first capabilities are made possible by a combination of the size and computing resources of the Cerebras Wafer Scale Engine-2 (WSE-2) and the Weight Streaming software architecture extensions available with release R1.4 of the Cerebras software platform, CSoft. When a model fits on a single processor, AI training is easy. But when a model has more parameters than can fit in memory, or a layer requires more compute than a single processor can handle, complexity explodes. The model must be split and spread across hundreds or thousands of GPUs. This process is painful and often takes months to complete. To make matters worse, the process is unique to each pairing of neural network and compute cluster, so the work is not portable to different compute clusters or different neural networks. It is entirely bespoke.

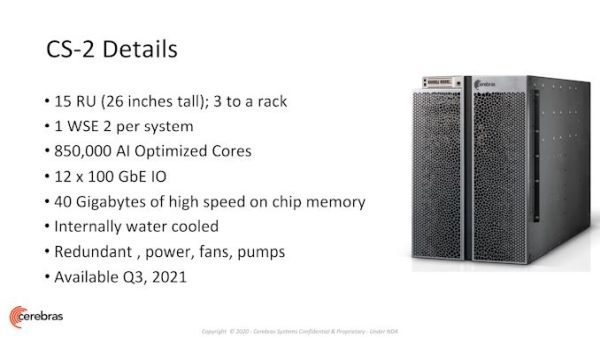

The largest processor ever built

The Cerebras WSE-2 is the largest processor ever built. It is 56 times larger, has 2.55 trillion more transistors, and has 100 times as many compute cores as the largest GPU. The size and computing resources of the WSE-2 allow every layer of even the largest neural networks to fit. The Cerebras Weight Streaming architecture disaggregates memory and compute, allowing memory (used to store parameters) to grow separately from compute. As a result, a single CS-2 can support models with hundreds of billions, even trillions, of parameters.
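As a rough illustration of what separating parameter storage from computation means, the toy Python sketch below keeps all weights in an external store and hands them to the compute step one layer at a time. It is not Cerebras code: `ParameterStore`, `streamed_forward` and the tiny two-layer network are hypothetical names and values chosen for illustration, and the actual Weight Streaming implementation is not described in this article.

```python
from typing import Iterator, List

Matrix = List[List[float]]


class ParameterStore:
    """Hypothetical off-device memory holding the weights of every layer."""

    def __init__(self, layers: List[Matrix]) -> None:
        self._layers = layers

    def stream(self) -> Iterator[Matrix]:
        # Yield one layer's weights at a time, so the compute side
        # never needs to hold the whole model at once.
        for weights in self._layers:
            yield weights


def matvec(weights: Matrix, x: List[float]) -> List[float]:
    """Multiply a weight matrix by an input vector."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def relu(values: List[float]) -> List[float]:
    """Element-wise ReLU activation."""
    return [max(0.0, v) for v in values]


def streamed_forward(store: ParameterStore, x: List[float]) -> List[float]:
    """Forward pass that only ever sees the current layer's weights."""
    activations = x
    for weights in store.stream():
        activations = relu(matvec(weights, activations))
    return activations


# Illustrative two-layer network (values are made up for the example).
store = ParameterStore([
    [[1.0, 0.0], [0.0, -1.0]],  # layer 1: 2 inputs -> 2 outputs
    [[1.0, 1.0]],               # layer 2: 2 inputs -> 1 output
])
print(streamed_forward(store, [2.0, 3.0]))
```

Because the compute loop only handles one layer at a time, the store can grow to hold more or larger layers without the loop changing, which is the property the article attributes to Weight Streaming.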

Details

Graphics processing units, on the other hand, have a fixed amount of memory per GPU. If the model requires more parameters than fit in that memory, you need to buy more GPUs and then spread the work across them. The result is an explosion of complexity. The Cerebras solution is much simpler and more elegant. By disaggregating compute from memory, the Weight Streaming architecture allows models with any number of parameters to run on a single CS-2.
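To make the fixed-memory constraint concrete, here is a back-of-the-envelope Python sketch that estimates how much memory the training state of each model named in this article needs, and how many fixed-memory devices that implies at minimum. Two figures are assumptions, not taken from the article: 16 bytes per parameter (a common rule of thumb for mixed-precision training with the Adam optimizer) and a hypothetical 80 GB of memory per device. Activations are ignored, so the results are lower bounds.

```python
import math

# Parameter counts of the models named in the article.
MODELS = {
    "GPT-3 1.3B": 1.3e9,
    "GPT-J 6B": 6e9,
    "GPT-3 13B": 13e9,
    "GPT-NeoX 20B": 20e9,
}

# ASSUMPTION: ~16 bytes per parameter for weights, gradients and
# Adam optimizer state in mixed-precision training.
BYTES_PER_PARAM = 16

# ASSUMPTION: hypothetical accelerator with 80 GB of on-device memory.
DEVICE_MEMORY_GB = 80


def training_state_gb(n_params: float,
                      bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Memory for weights, gradients and optimizer state, in GB."""
    return n_params * bytes_per_param / 1e9


def min_devices(n_params: float,
                device_memory_gb: float = DEVICE_MEMORY_GB,
                bytes_per_param: int = BYTES_PER_PARAM) -> int:
    """Fewest fixed-memory devices able to hold the training state."""
    needed = training_state_gb(n_params, bytes_per_param)
    return math.ceil(needed / device_memory_gb)


for name, n_params in MODELS.items():
    print(f"{name}: {training_state_gb(n_params):.1f} GB, "
          f"at least {min_devices(n_params)} device(s)")
```

Under these assumptions the 20-billion-parameter model needs about 320 GB for training state alone, which is at least four such devices before any activations are stored.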

Powered by the computational capacity of the WSE-2 and the architectural elegance of Weight Streaming, Cerebras is able to support the largest NLP networks on a single system. By supporting these networks on a single CS-2, Cerebras reduces setup time to minutes and enables model portability. You can switch from GPT-J to GPT-Neo, for example, with just a few keystrokes, a task that would take months of engineering on a cluster of hundreds of GPUs.

Customers

With customers in North America, Asia, Europe and the Middle East, Cerebras delivers industry-leading artificial intelligence solutions to a growing list of customers in the Enterprise, Government and High Performance Computing (HPC) segments. These include GlaxoSmithKline, AstraZeneca, TotalEnergies, nference, Argonne National Laboratory, Lawrence Livermore National Laboratory, Pittsburgh Supercomputing Center, Leibniz Supercomputing Centre, National Center for Supercomputing Applications, Edinburgh Parallel Computing Center (EPCC), National Energy Technology Laboratory and Tokyo Electron Devices.



The article New Cerebras WSE-2: record model comes from TechGameWorld.com.
