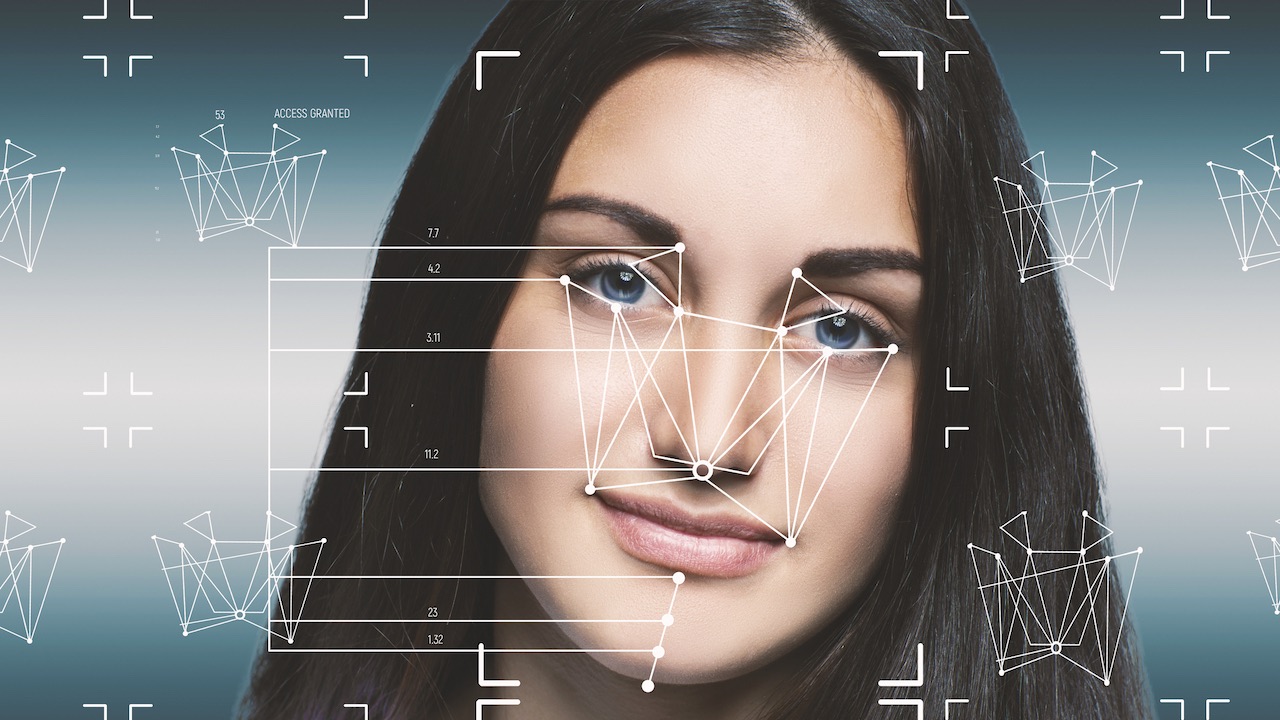

Women, algorithms, social networks and gender bias.

Women's bodies are sexualized on social networks far more than men's, especially when the published content shows women playing sports or pregnant women. This is one of the most striking findings of Gianluca Mauro, lecturer, researcher and founder of AI Academy, and journalist Hilke Schellmann.

Together they wrote and edited an important investigation published in the Guardian, entitled “There is no standard”, which showed how photos of women in poses or postures that are anything but sexual are sexualized by the artificial intelligence that evaluates published content, with the result that these photos are then shadowbanned or censored.

Why is this happening? Pictures posted on social media are analyzed by artificial intelligence algorithms that decide what to amplify and what to suppress, and many of these algorithms are gender biased. Gianluca Mauro discovered and then investigated this bias: he used these AI tools to analyze hundreds of photos of men and women in underwear, working out, or with partial nudity (sometimes related to medical exams), and found evidence that the AI classifies photos of women in everyday situations as sexually suggestive.

Images of women are rated as more sexually suggestive than similar images of men. As a result, social media platforms using these algorithms reduce the visibility of countless images showing women's bodies, damaging women-run businesses and further amplifying social inequalities.
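To make the kind of comparison described above concrete, here is a minimal sketch of how one might quantify such a gap. It assumes a classifier that returns a "raciness" score between 0 and 1 for each photo, and two sets of comparable photos of women and men (for example, both sets showing people working out). The function name `raciness_gap` and the 0.5 suppression threshold are hypothetical illustrations, not the method or cut-off used in the investigation.

```python
from statistics import mean


def raciness_gap(women_scores, men_scores, threshold=0.5):
    """Compare raciness scores for two sets of comparable photos.

    women_scores / men_scores: one score in [0, 1] per photo, as returned by
    a hypothetical image classifier.
    threshold: hypothetical cut-off above which a platform might suppress a photo.
    """
    if not women_scores or not men_scores:
        raise ValueError("both groups need at least one score")

    # Share of photos in each group that would cross the suppression threshold.
    flag_rate_women = sum(s >= threshold for s in women_scores) / len(women_scores)
    flag_rate_men = sum(s >= threshold for s in men_scores) / len(men_scores)

    return {
        # Positive values mean photos of women score higher on average.
        "mean_gap": mean(women_scores) - mean(men_scores),
        "flag_rate_women": flag_rate_women,
        "flag_rate_men": flag_rate_men,
    }
```

The average gap shows whether one group is systematically scored higher, while the flag rates show how that gap would translate into suppressed posts once a platform applies a cut-off.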

Algorithms are sexist. It’s time for Big Tech to do something

The use of AI algorithms in social media has the potential to positively transform our online experience, but only if such tools are used responsibly and ethically.

Addressing this issue requires a collective effort, involving both the platforms themselves and the legislators, in order to guarantee a fair and non-discriminatory representation of women in the digital environment. Only through concrete action can we build an inclusive online environment that promotes gender equality and fights discrimination perpetuated by AI algorithms and those who manage them.


Social media platforms have a responsibility to ensure that their AI algorithms are free from gender bias and discrimination. It is essential that these companies invest in diversity and inclusion in the design of their algorithms and that they are transparent in how they operate. Measures should be taken to monitor and correct any bias in AI systems, so as to avoid the spread of sexualized and discriminatory representations of women.

We talked about it with Gianluca Mauro, who told us in more detail how his investigation unfolded.

Gianluca Mauro


First of all, tell us what you do and explain your work with AI.

I am the founder and CEO of a company called AI Academy. We train people with no technical background in artificial intelligence. We believe that for AI to develop in a positive direction, more people of different kinds need to work in the field, to increase diversity in the AI world; and I mean diversity in an extremely broad sense: not only gender and ethnicity, but also background, education and studies. We have students who are chefs, designers, architects, doctors. So we really help anyone trying to enter this world.

Tell me about your investigation, later published by the Guardian: how did it come about, and what drove you to spend so many months on such complex research?

One day I happened to post a photo on LinkedIn (part of my job is raising awareness of how these technologies work). I noticed that, strangely, almost nobody had seen the post: it had about twenty views in an hour, when I normally get a thousand views in an hour. There was this huge difference in how far the content spread, which piqued my curiosity. As a technical person, someone who knows and builds algorithms, I was intrigued.

The first thing I did was a simple experiment on LinkedIn: I reposted the same text with a different photo. The photo in the first post showed two clothed women sitting on a bed. A very calm photo; there was nothing sexual about it.

After removing it, I used another photo, and the new post, with the same text but a different photo, got 850 views, against 29 for the first post.

Our interview with Gianluca Mauro, founder of AI Academy

So I figured the problem had to be the photo: something in it didn't fit. So I formed a hypothesis. There are algorithms that moderate pornographic content and block it. Given this evidence, my guess was that there might be other algorithms focused not on blocking pornography but on suppressing anything sensual yet non-sexual, meaning anything that is legally permitted and that anyone can publish, but that LinkedIn may not want on the platform. That was the hypothesis.

From there I began researching with the tools I knew. I knew that Microsoft, Google and Amazon sell algorithms to companies, so I checked whether any of them computed something like “the sensuality of an image”. Such algorithms were available for sale, so I started testing them.

When I found the first problems, I immediately thought of writing to Microsoft, since the problem mostly concerned Microsoft (LinkedIn is owned by Microsoft). I looked up the name of their ethics director, Natasha Crampton, on LinkedIn and sent her a message. She replied immediately.

She told me: “Send me the photos, my team is working on it.” For a couple of weeks she sent me at least one email a day. Then at one point she told me: “Everything is fine, we ran our tests, there is no problem, don't worry.”

What kind of tests did they run?

They didn't tell me what tests they ran, but we have some clues. They said the problem seemed to lie in the composition of the image: in the photo I had given them there were two women close together, and they said that those two bodies, one next to the other, were the core of the problem.

I tried to imagine what they did. There is a technique called a saliency map, which basically shows which parts of an image “excited” an algorithm; but it is not the best method for testing a problem of this kind. It is simply the more technical and easier method.
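Saliency maps can be computed in several ways, and we do not know which method Microsoft used. The sketch below uses occlusion sensitivity, one simple variant chosen here purely for illustration: hide one patch of the image at a time and record how much the model's score drops. The `score` function stands in for a real classifier and is an assumption, as is representing the image as a plain grid of pixel intensities.

```python
from typing import Callable, List

Image = List[List[float]]  # grid of pixel intensities (simplified, single channel)


def occlusion_saliency(image: Image, score: Callable[[Image], float],
                       patch: int = 2, fill: float = 0.0) -> Image:
    """Occlusion-based saliency map.

    Each patch of the image is replaced with `fill`, and every pixel in that
    patch receives the resulting drop in the model's score. `score` is a
    hypothetical stand-in for a real image classifier.
    """
    h, w = len(image), len(image[0])
    base = score(image)
    saliency = [[0.0] * w for _ in range(h)]

    for top in range(0, h, patch):
        for left in range(0, w, patch):
            # Copy the image and blank out one patch.
            occluded = [row[:] for row in image]
            for y in range(top, min(top + patch, h)):
                for x in range(left, min(left + patch, w)):
                    occluded[y][x] = fill

            # A large drop means this region mattered to the model.
            drop = base - score(occluded)
            for y in range(top, min(top + patch, h)):
                for x in range(left, min(left + patch, w)):
                    saliency[y][x] = drop

    return saliency
```

A map like this only shows where a model "looks" in a single image. As Mauro notes, that is not the best way to test for this kind of problem: it does not reveal whether the model systematically scores photos of women higher than comparable photos of men.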

Some time later I checked whether the problem had been solved, and found that it was still there. So I thought of writing to a journalist. I looked up Hilke Schellmann, who had already written about artificial intelligence and bias in another area, hiring: she had been testing algorithms that screen job candidates, and I really liked her work. I wrote to her on LinkedIn, she wrote back, and we started working together.


Photograph: Dragos Gontariu/Unsplash


Then the investigation started, and the article was published in February 2023, after about a year and a half of work. We also had to understand who had been harmed by this problem, which is not easy to find out.

In principle we understand this: it is obviously a problem that photos of female bodies are suppressed on Instagram. But we also wanted to find out concretely whether anyone had suffered material harm, not just moral harm. So we talked to photographers and fitness professionals, and some incredible examples came up.

We found that pregnant women were the category that most “excited” these algorithms. I use “excite” in the technical sense: the numbers shoot up. The algorithms seemed to be triggered by women and anything associated with femininity, such as bras, long hair, pumps and the like, and by exposed skin. Lots of exposed skin.

I talked to photographers who specialize in photographing pregnant women and whose businesses were failing because all their content was being blocked by social media. This kind of work helped us bring a whole range of issues to light.

It's as if artificial intelligence decides whose voice gets heard.

Let's remember that there are human beings behind artificial intelligence: it is people who build the algorithms. Algorithms actually do something very simple: you give them a task, such as learning to classify photos as sensual or non-sensual, and they will find any way to…
